The Google Cloud Vision API is a REST API that lets developers analyze the contents of images. In my experience, it is currently the best working solution for detecting objects in images, compared with custom-trained Haar/LBP cascade classifiers or IBM’s Watson. Although it is still in beta, it gives very good results on object detection problems. The API currently offers the following feature types, each backed by its own detection algorithm.

| Feature type | Description |
|---|---|
| LABEL_DETECTION | Execute Image Content Analysis on the entire image and return |
| TEXT_DETECTION | Perform Optical Character Recognition (OCR) on text within the image |
| FACE_DETECTION | Detect faces within the image |
| LANDMARK_DETECTION | Detect geographic landmarks within the image |
| LOGO_DETECTION | Detect company logos within the image |
| SAFE_SEARCH_DETECTION | Determine image safe search properties on the image |
| IMAGE_PROPERTIES | Compute a set of properties about the image (such as the image’s dominant colors) |

The following example calls the API with curl:

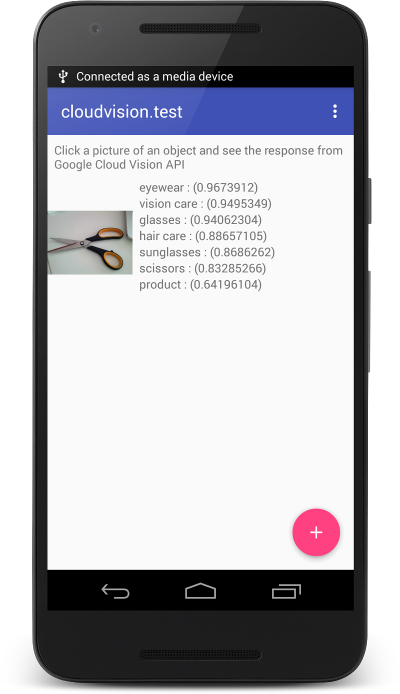

You can obtain the API_KEY from your Google Cloud Platform Console. A browser key works, since it can also be used from Android. The image must be Base64-encoded before you make the request; the encoding produces a plain string that you can place directly in the request body. Alternatively, if your images are hosted in Cloud Storage buckets, you can pass Google Cloud Storage URLs instead. My test image and the Cloud Vision API response are shown below.
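To make the encoding step concrete, here is a minimal sketch of building the request body by hand, using only the Java standard library. The class and method names (`VisionRequestSketch`, `buildRequestBody`) are my own placeholders, and the endpoint constant assumes the public `v1` REST URL with the key passed as a query parameter; treat it as an illustration of the request shape, not as the exact call used in this post.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper for illustration; not part of the original project.
class VisionRequestSketch {

    // Assumed endpoint: the v1 annotate URL, with the API key appended as ?key=...
    static final String ENDPOINT = "https://vision.googleapis.com/v1/images:annotate?key=";

    // Builds the JSON body for a request with one image and one feature type.
    static String buildRequestBody(byte[] imageBytes, String featureType, int maxResults) {
        // Standard Base64 without line breaks; its alphabet needs no JSON escaping.
        String content = Base64.getEncoder().encodeToString(imageBytes);
        return "{\"requests\":[{"
                + "\"image\":{\"content\":\"" + content + "\"},"
                + "\"features\":[{\"type\":\"" + featureType + "\","
                + "\"maxResults\":" + maxResults + "}]"
                + "}]}";
    }

    public static void main(String[] args) {
        // Stand-in bytes; a real caller would read a JPEG or PNG file here.
        byte[] fakeImage = "not a real image".getBytes(StandardCharsets.UTF_8);
        System.out.println("POST " + ENDPOINT + "API_KEY");
        System.out.println(buildRequestBody(fakeImage, "LABEL_DETECTION", 5));
    }
}
```

`java.util.Base64` is only available on Android from API level 26; on older versions, `android.util.Base64.encodeToString(bytes, Base64.NO_WRAP)` should give the same unwrapped string.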

Test Image

Response

```json
{
  "responses": [
    {
      "labelAnnotations": [
        {
          "mid": "/m/09j2d",
          "description": "clothing",
          "score": 0.99011743
        },
        {
          "mid": "/m/083jv",
          "description": "white",
          "score": 0.92788029
        },
        {
          "mid": "/m/06rrc",
          "description": "shoe",
          "score": 0.91207343
        },
        {
          "mid": "/m/09j5n",
          "description": "footwear",
          "score": 0.89330035
        },
        {
          "mid": "/m/0fly7",
          "description": "jeans",
          "score": 0.75597358
        },
        {
          "mid": "/m/017ftj",
          "description": "sunglasses",
          "score": 0.71857065
        },
        {
          "mid": "/m/07mhn",
          "description": "trousers",
          "score": 0.70007712
        }
      ]
    }
  ]
}
```

I’m using Retrofit to work with the API. The following is my API interface.

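```java
// Retrofit 1.x annotations, assumed from the synchronous return type below.
// In Retrofit 2 these live under retrofit2.http and the method would return Call<LabelsResponse>.
import retrofit.http.Body;
import retrofit.http.POST;
import retrofit.http.Query;

/**
 * Created by napster on 25/02/16.
 */
public interface GoogleCloudVisionApi {

    // Sends the wrapped request as the JSON body; the API key travels as the "key" query parameter.
    @POST("/images:annotate")
    LabelsResponse detectObjects(@Query("key") String apikey, @Body ReqWrapper reqWrapper);
}
```

As you can see, I’m using a `@Body` parameter, because I wrap the request in a plain old Java object.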

```java
/**
 * Created by napster on 25/02/16.
 */
public class ReqWrapper {

    // Top-level "requests" array of the images:annotate body.
    public Request[] requests;

    public ReqWrapper(Request[] requests) {
        this.requests = requests;
    }

    // One image together with the features to run on it.
    public static class Request {
        public Image image;
        public Feature[] features;

        public Request(Image image, Feature[] features) {
            this.image = image;
            this.features = features;
        }
    }

    // Holds the Base64-encoded image data.
    public static class Image {
        public String content;

        public Image(String content) {
            this.content = content;
        }
    }

    // A feature type such as LABEL_DETECTION and the maximum number of results to return.
    public static class Feature {
        public String type;
        public int maxResults;

        public Feature(String type, int maxResults) {
            this.type = type;
            this.maxResults = maxResults;
        }
    }
}
```

Now create an API connector, declare the required permissions in the manifest, and add a camera intent to capture random test images from around you. This is a good test case because it reveals the real capabilities of the Google Cloud Vision system: the images are mostly noisy and completely unknown to Google’s ecosystem (such as Google Images). Here is what I’ve got.
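The `LabelsResponse` type returned by `detectObjects` is not listed in this post. A minimal sketch, assuming it simply mirrors the JSON response shown earlier, could look like the following. The nested class names and the `confidentLabels` helper are hypothetical additions of mine; only the field names (`responses`, `labelAnnotations`, `mid`, `description`, `score`) come from the actual response.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical response model mirroring the JSON above; not the original class.
class LabelsResponse {

    public AnnotateResult[] responses;

    // One entry per request that was sent.
    public static class AnnotateResult {
        public LabelAnnotation[] labelAnnotations;
    }

    // A single detected label with its confidence score.
    public static class LabelAnnotation {
        public String mid;
        public String description;
        public float score;

        public LabelAnnotation(String mid, String description, float score) {
            this.mid = mid;
            this.description = description;
            this.score = score;
        }
    }

    // Collects the descriptions of all labels scoring at least minScore.
    static List<String> confidentLabels(LabelsResponse response, float minScore) {
        List<String> labels = new ArrayList<>();
        if (response == null || response.responses == null) {
            return labels;
        }
        for (AnnotateResult result : response.responses) {
            // Guard against a missing array, e.g. when no labels were found.
            if (result == null || result.labelAnnotations == null) {
                continue;
            }
            for (LabelAnnotation label : result.labelAnnotations) {
                if (label.score >= minScore) {
                    labels.add(label.description);
                }
            }
        }
        return labels;
    }

    public static void main(String[] args) {
        // Rebuild a small part of the sample response by hand.
        AnnotateResult result = new AnnotateResult();
        result.labelAnnotations = new LabelAnnotation[] {
            new LabelAnnotation("/m/09j2d", "clothing", 0.99011743f),
            new LabelAnnotation("/m/0fly7", "jeans", 0.75597358f),
            new LabelAnnotation("/m/07mhn", "trousers", 0.70007712f)
        };
        LabelsResponse response = new LabelsResponse();
        response.responses = new AnnotateResult[] { result };
        System.out.println(confidentLabels(response, 0.75f));
    }
}
```

Filtering on `score` is one simple way to cope with noisy camera images: lower-confidence labels can be dropped before anything is shown to the user.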

You can read more about the Google Cloud Vision API here.